Agent workflow memory

With the rapid adoption of AI agents and their workflows by enterprises and individuals alike, enabling agents to perform long-horizon tasks with complex trajectories has become a significant challenge. The recent paper “Agent Workflow Memory” introduces a novel approach to this challenge: it equips agents with a memory mechanism that helps them complete such tasks with higher accuracy.

What are long-horizon tasks?

Before reading more about “Agent Workflow Memory”, let us first understand what long-horizon tasks are and why current AI agents struggle with them. Long-horizon tasks are tasks that require the agent to perform a series of actions or decisions over an extended period to achieve a specific goal. Some examples of such tasks are:

- Web navigation: Browsing multiple web pages to retrieve information.

- Game playing: Making strategic moves over many turns in a game environment.

- Task automation: Performing a sequence of operations in software applications.

Example: Long-horizon tasks

These tasks may sound simple. Google, for example, efficiently gathers vast amounts of information from trillions of web pages. But that is where the catch is: Google relies on meticulously written code and algorithms designed to work every time. This brings us to the limitations of current LLM-based agents on such tasks.

Limitations of current LLM-based agentic workflows

Current LLM-based agents face several limitations that cannot be solved simply by adding more functions or actions to the agent’s workflow. These limitations become most apparent when an agent's work extends over many days, as it does in most pipelines:

- Lack of memory: Once previous interactions fall outside a model's context window, the model can no longer act on them.

- Inability to plan: Such agents struggle with multi-step reasoning, because the way the model processes each query does not let it plan reliably every time it is used.

- Contextual drift: Even within a single session, such agents sometimes struggle to return to your original context (try decoding JavaScript code with ChatGPT).

Agent Workflow Memory is proposed as a solution to these problems.

Agent workflow memory (AWM)

The AWM method addresses the significant memory-related limitations of current LLM-based agents by introducing three major modules into an agentic workflow. Taking inspiration from how humans work, the authors aim to give agents the ability to solve complex tasks by learning reusable task workflows, in the same way a person learns a routine such as generating image masks in Premiere Pro using multiple frames as a reference.

This diagram represents the architecture of an agent-based workflow memory system. The process begins with the environment providing a state (s) to the agent, which continuously observes it and responds by taking actions. The resulting sequence of actions (the trajectory) is then evaluated to assess whether the agent's query or task has been solved. If the trajectory is judged successful, the agent induces new workflows from it and integrates them into its memory for future reference. The loop repeats as the agent continually learns and adapts based on its memory and environmental inputs, improving its performance.
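The loop can be sketched in a few lines of Python. This is a minimal sketch under loud assumptions: `WorkflowMemory`, `run_agent`, `evaluate_trajectory`, `induce_workflow`, and `online_awm` are hypothetical names, and the LLM-driven agent, evaluator, and induction steps are replaced by trivial stand-ins so the control flow is easy to follow. It mirrors the online setting described in the AWM paper, where workflows are induced only from trajectories that an evaluator judges successful.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class WorkflowMemory:
    """Hypothetical store of induced workflows (each a list of abstract steps)."""
    workflows: list[list[str]] = field(default_factory=list)

    def add(self, workflow: list[str]) -> None:
        # Skip exact duplicates so repeated successes do not bloat memory.
        if workflow not in self.workflows:
            self.workflows.append(workflow)


def run_agent(query: str, memory: WorkflowMemory) -> list[str]:
    """Stand-in for the LLM agent: returns the trajectory of actions it took.

    A real agent would condition an LLM on the query, the observed state,
    and memory.workflows; this stub ignores them and returns a fixed pattern.
    """
    return [f"search({query})", "click(first_result)", "read(page)"]


def evaluate_trajectory(query: str, trajectory: list[str]) -> bool:
    """Stand-in for an LLM-based evaluator: 'success' if the agent ended by reading."""
    return bool(trajectory) and trajectory[-1].startswith("read")


def induce_workflow(trajectory: list[str]) -> list[str]:
    """Abstract a concrete trajectory into a reusable workflow by dropping arguments."""
    return [action.split("(")[0] for action in trajectory]


def online_awm(queries: list[str]) -> WorkflowMemory:
    memory = WorkflowMemory()
    for query in queries:
        trajectory = run_agent(query, memory)
        if evaluate_trajectory(query, trajectory):
            # Only trajectories judged successful become workflows in memory.
            memory.add(induce_workflow(trajectory))
    return memory


memory = online_awm(["cheap flights to Paris", "weather in Tokyo"])
print(memory.workflows)  # [['search', 'click', 'read']]
```

Both queries produce the same abstract workflow, so memory ends up holding a single reusable routine rather than two near-identical copies.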

Workflow induction

Offline workflow induction involves analyzing past data to identify common patterns and workflows. In contrast, online induction happens in real time, where the agent dynamically recognizes new workflows during task execution based on the current context and actions.
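To make offline induction concrete, here is a minimal sketch that treats a "workflow" as an action sub-sequence shared by several past trajectories. This is an assumption for illustration only: the AWM paper prompts an LLM to induce workflows, whereas this sketch swaps in a simple frequency count, and `common_subroutines` and the example trajectories are hypothetical.

```python
from __future__ import annotations

from collections import Counter


def common_subroutines(
    trajectories: list[list[str]], length: int = 3, min_count: int = 2
) -> list[tuple[str, ...]]:
    """Return action n-grams of `length` steps that occur in at least
    `min_count` different trajectories (a crude stand-in for LLM induction)."""
    counts: Counter[tuple[str, ...]] = Counter()
    for trajectory in trajectories:
        # A set, so a sub-routine repeated inside one trajectory counts once.
        ngrams = {
            tuple(trajectory[i : i + length])
            for i in range(len(trajectory) - length + 1)
        }
        counts.update(ngrams)
    return sorted(ngram for ngram, count in counts.items() if count >= min_count)


past_trajectories = [
    ["click(search_box)", "type(query)", "press(enter)", "click(result)"],
    ["click(search_box)", "type(query)", "press(enter)", "click(filter)"],
    ["click(menu)", "click(settings)"],
]
print(common_subroutines(past_trajectories))
# [('click(search_box)', 'type(query)', 'press(enter)')]
```

The shared "search" routine surfaces because it appears in two trajectories, while the one-off steps around it do not.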

Memory retrieval module

Workflow memory utilizes a structured storage system that allows for efficient retrieval. The agent selectively retrieves workflows relevant to the current task or context, improving task performance.
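A toy version of selective retrieval is sketched below. It assumes, purely for illustration, that each stored workflow is keyed by a short text description and that relevance is scored by word overlap (Jaccard similarity); `retrieve_workflows` and the sample memory are hypothetical, and a real system might instead use embeddings or an LLM to judge relevance.

```python
from __future__ import annotations

import re


def tokenize(text: str) -> set[str]:
    """Lowercase word set used for a simple overlap score."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve_workflows(
    query: str, memory: dict[str, list[str]], k: int = 2
) -> list[tuple[str, list[str]]]:
    """Return up to k (description, steps) pairs ranked by Jaccard word overlap."""
    query_tokens = tokenize(query)
    scored = []
    for description, steps in memory.items():
        desc_tokens = tokenize(description)
        union = query_tokens | desc_tokens
        score = len(query_tokens & desc_tokens) / len(union) if union else 0.0
        if score > 0:  # ignore workflows that share no words with the task
            scored.append((score, description, steps))
    scored.sort(key=lambda item: item[0], reverse=True)
    return [(description, steps) for _, description, steps in scored[:k]]


workflow_memory = {
    "search for a product and add it to the cart": [
        "click(search_box)", "type(product)", "click(add_to_cart)"],
    "book a flight between two cities": [
        "type(origin)", "type(destination)", "click(search_flights)"],
    "change account settings": ["click(menu)", "click(settings)"],
}
print(retrieve_workflows("add a laptop to the cart", workflow_memory, k=1))
```

Keeping only the top-k matches is what makes retrieval "selective": unrelated workflows, such as changing account settings here, never reach the agent.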

LLM integration

The agent leverages retrieved workflows to guide decision-making, selecting actions that align with past successes. With its LLM's vast knowledge and the retrieved data, it can also adapt these workflows to fit new, unforeseen situations.
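One simple way an agent could use retrieved workflows is to place them in the LLM prompt ahead of the new task, so the model can follow or adapt them. The sketch below assumes a plain-text prompt format; `build_prompt` and its wording are hypothetical, and the call to an actual LLM is left out.

```python
from __future__ import annotations


def build_prompt(task: str, workflows: list[tuple[str, list[str]]]) -> str:
    """Assemble a plain-text prompt that shows retrieved workflows before the task."""
    lines = ["You are a web agent. Workflows that solved similar tasks before:"]
    for number, (description, steps) in enumerate(workflows, start=1):
        lines.append(f"Workflow {number}: {description}")
        lines.extend(f"  - {step}" for step in steps)
    # Invite the model to adapt rather than copy, since the new task may differ.
    lines.append("Follow a workflow where it fits, and adapt it where the task differs.")
    lines.append(f"Task: {task}")
    lines.append("Next action:")
    return "\n".join(lines)


prompt = build_prompt(
    "add a laptop to the cart",
    [("search for a product and add it to the cart",
      ["click(search_box)", "type(product)", "click(add_to_cart)"])],
)
print(prompt)
```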

Significance of AWM

Benchmark results highlight the significance of Agent Workflow Memory (AWM), showing that it clearly outperforms baseline agents on long-horizon web navigation tasks that require multi-step problem-solving and task automation. AWM’s structured memory allows it to retrieve relevant workflows and improve task efficiency, while online induction enables dynamic adaptation in real-time scenarios.

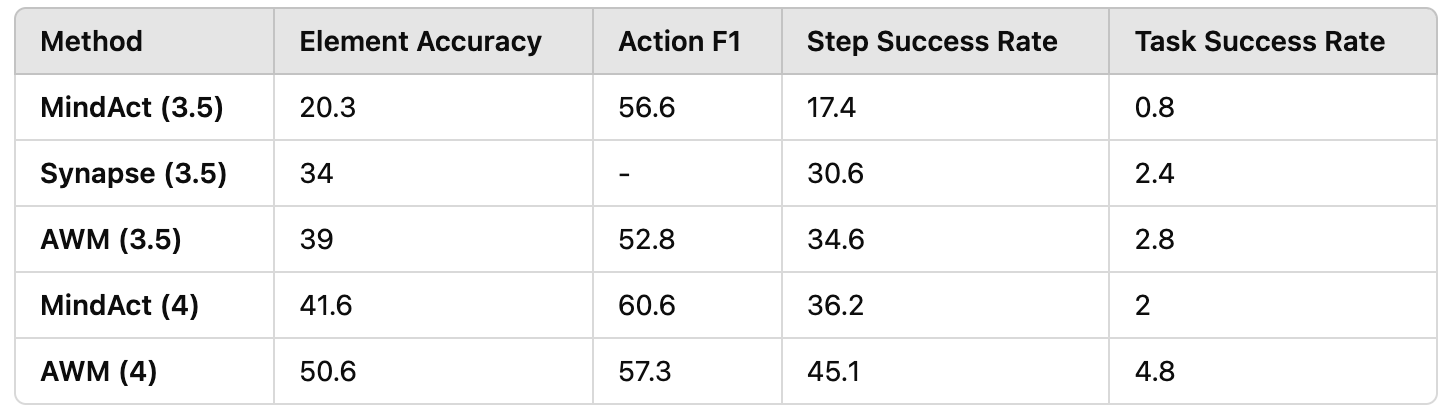

AWM’s Performance Against Baseline on WebArena

The authors of the paper conducted extensive experiments to evaluate the performance of AWM using two prominent benchmarks:

Mind2Web

Mind2Web tests web navigation agents' ability to generalize across tasks, websites, and domains. Tasks span areas like shopping and travel and require agents to interact with web elements step by step. At each step, the agent must select the correct element and action (e.g., click, type), and performance is measured with four metrics:

- Element accuracy: How often the agent picks the correct web element.

- Action F1: Evaluates the accuracy of the action taken on the selected element, as an F1 score.

- Step success rate: Checks whether both the element and the action at each step were correct.

- Task success rate: Measures whether all steps in a task are completed correctly from start to finish.
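The four metrics can be computed from per-step predictions as sketched below. This is an illustrative approximation, not the official Mind2Web evaluation code: the `Step` record is hypothetical, Action F1 is taken here as a token-level F1 between the predicted and reference action strings (operation plus any typed value), and a step counts as successful only when the element matches and the action matches exactly.

```python
from __future__ import annotations

from collections import Counter
from dataclasses import dataclass


@dataclass
class Step:
    pred_element: str
    gold_element: str
    pred_action: str  # operation plus any value, e.g. "type red shoes"
    gold_action: str


def token_f1(pred: str, gold: str) -> float:
    """Token-level F1 between two action strings."""
    pred_tokens, gold_tokens = pred.split(), gold.split()
    overlap = sum((Counter(pred_tokens) & Counter(gold_tokens)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(pred_tokens)
    recall = overlap / len(gold_tokens)
    return 2 * precision * recall / (precision + recall)


def step_ok(step: Step) -> bool:
    # Both the element and the full action must be correct.
    return (step.pred_element == step.gold_element
            and token_f1(step.pred_action, step.gold_action) == 1.0)


def mind2web_metrics(tasks: list[list[Step]]) -> dict[str, float]:
    steps = [step for task in tasks for step in task]
    n = len(steps)
    return {
        "element_accuracy": sum(s.pred_element == s.gold_element for s in steps) / n,
        "action_f1": sum(token_f1(s.pred_action, s.gold_action) for s in steps) / n,
        "step_success_rate": sum(step_ok(s) for s in steps) / n,
        # A task succeeds only if every one of its steps succeeds.
        "task_success_rate": sum(all(step_ok(s) for s in task) for task in tasks) / len(tasks),
    }


tasks = [
    [Step("search_box", "search_box", "type laptop", "type laptop"),
     Step("go_button", "go_button", "click", "click")],
    [Step("link_a", "link_b", "click", "click"),
     Step("search_box", "search_box", "type red shoes", "type shoes")],
]
print(mind2web_metrics(tasks))
```

The example shows why task success rates are so much lower than step success rates: a single wrong element or a slightly wrong typed value anywhere in a task makes the whole task fail.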

Mind2Web Cross-Task Evaluations

Key achievements:

- Compared to other methods, AWM achieved the highest Step Success Rate (45.1% with GPT-4) and Task Success Rate (4.8%).

- AWM’s success is primarily attributed to its workflow mechanism, which reuses learned sub-tasks across different tasks, enhancing generalization and accuracy in element selection and actions.

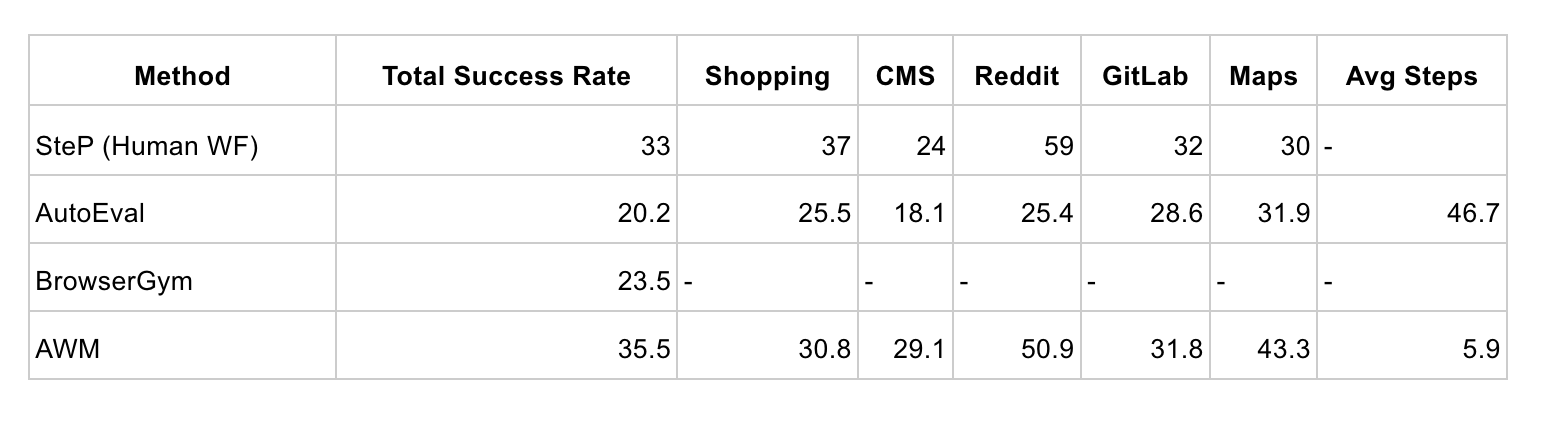

WebArena

WebArena tests agents on a variety of website tasks, such as browsing or content management. Performance is measured by task success rate (the fraction of tasks completed) and average steps (the number of steps taken per task, where fewer steps means greater efficiency).
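Both WebArena metrics reduce to simple averages over episodes, as in this sketch. The `Episode` record, its field names, and `webarena_summary` are hypothetical, and averaging steps over all episodes (rather than only successful ones) is an assumption.

```python
from __future__ import annotations

from collections import defaultdict
from typing import NamedTuple


class Episode(NamedTuple):
    platform: str  # e.g. "reddit", "maps"
    success: bool  # did the agent complete the task?
    steps: int     # actions taken before stopping


def webarena_summary(episodes: list[Episode]) -> tuple[float, dict[str, float], float]:
    by_platform: dict[str, list[bool]] = defaultdict(list)
    for ep in episodes:
        by_platform[ep.platform].append(ep.success)
    success_rate = sum(ep.success for ep in episodes) / len(episodes)
    per_platform = {p: sum(results) / len(results) for p, results in by_platform.items()}
    average_steps = sum(ep.steps for ep in episodes) / len(episodes)
    return success_rate, per_platform, average_steps


episodes = [
    Episode("reddit", True, 5),
    Episode("reddit", False, 9),
    Episode("maps", True, 4),
    Episode("maps", True, 6),
]
print(webarena_summary(episodes))
# (0.75, {'reddit': 0.5, 'maps': 1.0}, 6.0)
```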

WebArena (Task success rate breakdown by platform)

Key achievements:

- AWM achieved the highest overall Task Success Rate (35.5%) among all methods, showing significant improvements over the autonomous baseline methods.

- AWM outperformed the human-engineered SteP method in several domains (e.g., Reddit, Maps), highlighting its ability to induce workflows and adapt to different environments autonomously.

- AWM also reduced the average number of steps per task, indicating that it solved tasks more efficiently as well as more successfully than other methods.

These results suggest that AWM could help in complex settings such as automated customer support or AI-driven research assistants, where intricate workflows require multi-stage decision-making without losing context or drifting from the original task.

Conclusion

In conclusion, Agent Workflow Memory significantly boosts the existing AI agent pipeline, providing a more reliable “agent” for precise operations and workflows. It goes beyond traditional AI agents by granting them memory of reusable workflows, which in effect makes them “wiser.”

Further reading

Hybrid Multimodal Memory Empowered Agents Excel in Long-Horizon Tasks